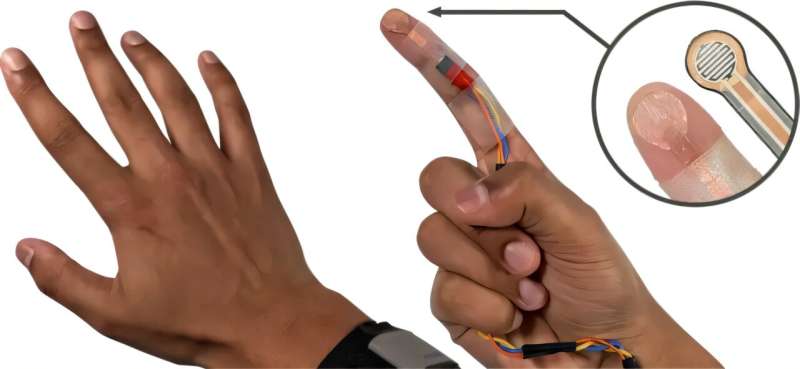

The researchers used a custom touch sensor that ran along the underside of the index finger and palm to collect data about different types of touch at different forces while staying invisible to the camera. Credit: Carnegie Mellon University

A paper published in the Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology by researchers at Carnegie Mellon University’s Human-Computer Interaction Institute (HCII) introduces EgoTouch, a tool that uses artificial intelligence to control AR/VR interfaces by touching the skin with a finger.

The team wanted to ultimately design a control that would provide tactile feedback using only the sensors that come with a standard AR/VR headset.

OmniTouch, a method previously developed by Chris Harrison, an associate professor in the HCII and director of the Future Interfaces Group, came close. But that method required a special, clunky, depth-sensing camera. Vimal Mollyn, a Ph.D. student advised by Harrison, had the idea to use a machine learning algorithm to train conventional cameras to recognize touch.

“Try taking your finger and see what happens when you touch your skin with it. You will notice that there are these shadows and local skin deformations that only happen when you are touching the skin,” Mollyn said. “If we can see those, then we can train a machine learning model to do the same thing, and that’s basically what we did.”

Mollyn collected the data for EgoTouch using a custom touch sensor that ran along the underside of the index finger and palm. The sensor collected data on different types of touch at different forces while staying invisible to the camera. The model then learned to correlate the visual features of shadows and skin deformations with touch and force, without human annotation.
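The article does not describe how sensor readings were matched to camera frames. As a rough illustration of how a hidden touch sensor could label video automatically, the sketch below pairs each frame with the sensor reading closest to it in time. The function name `label_frames`, the `touch_threshold` value and the data layout are all assumptions made for this example, not details from the paper.

```python
from bisect import bisect_left


def label_frames(frame_times, sensor_times, sensor_forces, touch_threshold=0.1):
    """Pair each camera frame with the nearest-in-time sensor reading.

    Hypothetical helper: assumes sensor_times is sorted and non-empty, and
    that a force above touch_threshold (arbitrary units) counts as a touch.
    Returns one (is_touching, force) tuple per frame.
    """
    labels = []
    for t in frame_times:
        i = bisect_left(sensor_times, t)
        # The nearest reading is either just before or just after the frame.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(sensor_times)]
        nearest = min(candidates, key=lambda j: abs(sensor_times[j] - t))
        force = sensor_forces[nearest]
        labels.append((force > touch_threshold, force))
    return labels


labels = label_frames(
    frame_times=[0.0, 0.5, 1.0],
    sensor_times=[0.0, 0.4, 0.9],
    sensor_forces=[0.0, 0.3, 0.05],
)
print(labels)  # [(False, 0.0), (True, 0.3), (False, 0.05)]
```

Labels produced this way need no manual marking of the video, which is the practical point of training against a sensor the camera cannot see.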

The team expanded their training data collection to include 15 users with different skin tones and hair densities and collected hours of data across multiple poses, activities and lighting conditions.

EgoTouch can detect touch with more than 96% accuracy and has a false positive rate of around 5%. It recognizes pressing down, lifting up and dragging. The model can also classify whether a touch was light or hard with 98% accuracy.
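Accuracy and false positive rate measure different things, which is why both are reported. The sketch below shows the standard definitions on a toy set of per-frame predictions; the function name `touch_metrics` and the sample data are invented for illustration and do not reflect the paper's evaluation protocol.

```python
def touch_metrics(predicted, actual):
    """Return (accuracy, false_positive_rate) for boolean touch predictions.

    accuracy            = correct predictions / all predictions
    false positive rate = false alarms / all frames with no real touch
    """
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # touch correctly detected
    tn = sum(1 for p, a in pairs if not p and not a)  # no touch, none reported
    fp = sum(1 for p, a in pairs if p and not a)      # touch reported, none happened
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return accuracy, false_positive_rate


predicted = [True, True, False, False, True]
actual = [True, False, False, False, True]
accuracy, fpr = touch_metrics(predicted, actual)
print(accuracy)  # 0.8
```

In this toy example four of five frames are classified correctly, while one of the three no-touch frames triggers a false alarm, so the false positive rate is one third.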

“That can be really useful for having a right-click functionality on the skin,” Mollyn said.

Detecting variations in touch could let developers mimic touchscreen gestures on the skin. For example, a smartphone can recognize scrolling up or down a page, zooming in, swiping right, or pressing and holding on an icon. To translate this to a skin-based interface, the camera must recognize the subtle differences between the type of touch and the force of touch.
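To make the idea concrete, here is a minimal sketch of how an application might turn one press-to-lift sequence of touch samples into a touchscreen-style gesture. Everything in it is hypothetical: the `TouchSample` fields, the `classify_gesture` function, the gesture names and the thresholds are assumptions for illustration, not part of EgoTouch.

```python
from dataclasses import dataclass


@dataclass
class TouchSample:
    time: float  # seconds since the press began
    x: float     # position on the skin, arbitrary units
    y: float
    hard: bool   # True if a force classifier labeled this sample "hard"


def classify_gesture(samples, hold_seconds=0.5, drag_distance=1.0):
    """Classify the samples between one press-down and the next lift-up."""
    if not samples:
        return "none"
    first, last = samples[0], samples[-1]
    dx, dy = last.x - first.x, last.y - first.y
    # Enough movement means a drag; the dominant axis decides which kind.
    if (dx * dx + dy * dy) ** 0.5 >= drag_distance:
        if abs(dx) >= abs(dy):
            return "swipe right" if dx > 0 else "swipe left"
        return "scroll down" if dy > 0 else "scroll up"
    # A stationary touch is told apart by force first, then by duration.
    if any(s.hard for s in samples):
        return "hard press"
    if last.time - first.time >= hold_seconds:
        return "press and hold"
    return "tap"


swipe = [TouchSample(0.0, 0.0, 0.0, False), TouchSample(0.2, 2.0, 0.1, False)]
print(classify_gesture(swipe))  # swipe right
```

The "hard press" branch is where a light-versus-hard force label would come in, for instance to trigger the right-click behavior mentioned above.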

Accuracy was about the same across different skin tones and hair densities, and at different areas on the hand and forearm such as the front of the arm, back of the arm, palm and back of the hand. The system did not work well on bony areas like the knuckles.

“It’s probably because there wasn’t as much skin deformation in those areas,” Mollyn said. “As a user interface designer, what you can do is avoid placing elements on those regions.”

Mollyn is exploring ways to use night vision cameras and nighttime illumination to enable the EgoTouch system to work in the dark. He is also collaborating with researchers to extend this touch-detection method to surfaces other than the skin.

“For the first time, we have a system that just uses a camera that is already in all the headsets. Our models are calibration-free, and they work right out of the box,” Mollyn said. “Now we can build on prior work on on-skin interfaces and actually make them real.”

More information:

Vimal Mollyn et al, EgoTouch: On-Body Touch Input Using AR/VR Headset Cameras, Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology (2024). DOI: 10.1145/3654777.3676455

Provided by Carnegie Mellon University

Citation: AI-based tool creates simple interfaces for virtual and augmented reality (2024, November 13), retrieved November 14, 2024 from https://techxplore.com/news/2024-11-ai-based-tool-simple-interfaces.html

